What Is MCP (Model Context Protocol) and Why It’s Key to AI Agent Development

June 3, 2025

As large language models (LLMs) continue to shape the future of software, a critical gap remains: how can we reliably connect these models to external tools, APIs, and workflows? Traditional methods are often brittle, inconsistent, and hard to maintain. The Model Context Protocol (MCP) proposes a compelling answer by offering a standardized way for LLMs to understand and use external capabilities.

What is the Model Context Protocol (MCP)?

The Model Context Protocol (MCP) is an open standard designed to connect AI assistants to external tools, APIs, and data sources, including content repositories, business systems, and developer environments.

Introduced by Anthropic in November 2024, MCP provides a universal framework that enables developers to define how tools and data are exposed in a structured, machine-readable way. This allows LLMs to access and interact with those resources safely, consistently, and without relying solely on prompt engineering.

Rather than patching together bespoke integrations, MCP formalizes how capabilities are described so that models can understand what a tool does, what inputs it expects, and when it should be used.

Why MCP Matters

Most current implementations of tool use in LLMs are handcrafted: developers manually create wrappers, instruct models through fragile prompts, or hardcode integration logic. These approaches are error-prone and difficult to maintain.

MCP changes that by offering:

- Interoperability: A tool exposed through an MCP server can be reused by any MCP-compatible client, regardless of the agent, model, or platform behind it.

- Reusability: Structured tool definitions make it easy to share, update, and maintain integrations.

- Security and clarity: By clearly defining inputs, outputs, and usage context, MCP reduces the risks of misuse or model confusion.

In short, MCP turns tool use into a predictable, composable interface between models and external systems.

How MCP Works

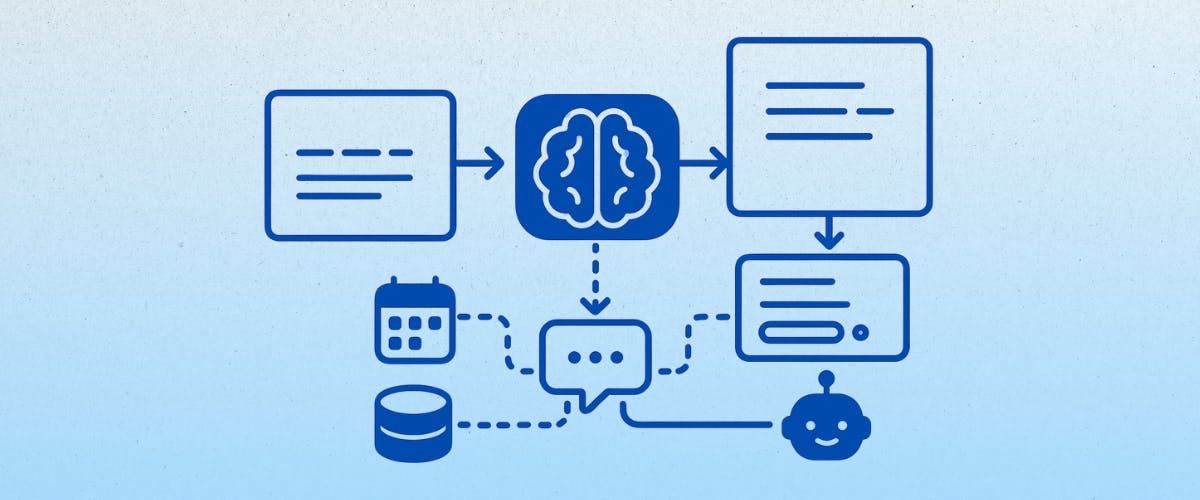

MCP creates a bridge between AI assistants and external systems by establishing a standard way for them to communicate. Think of it as a universal translator that helps AI models understand what external tools can do and how to use them properly.
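Under the hood, MCP messages are JSON-RPC 2.0 objects: a request carries a `method` and an `id`, and the matching response carries either a `result` or an `error`. The minimal Python sketch below only builds and parses such messages. The helper names `make_request` and `parse_response` are hypothetical, and a real client would send the messages over a transport such as stdio or HTTP, typically through an official MCP SDK rather than hand-rolled code.

```python
import json
from itertools import count
from typing import Any, Optional

# Hypothetical helpers: they only build and parse JSON-RPC 2.0 messages.
# A real MCP client would also send them over a transport (e.g., stdio).
_next_id = count(1)

def make_request(method: str, params: Optional[dict[str, Any]] = None) -> str:
    """Serialize a JSON-RPC 2.0 request with an auto-incrementing id."""
    msg: dict[str, Any] = {"jsonrpc": "2.0", "id": next(_next_id), "method": method}
    if params is not None:
        msg["params"] = params
    return json.dumps(msg)

def parse_response(raw: str) -> dict[str, Any]:
    """Return the `result` of a JSON-RPC 2.0 response, raising if it carries an `error`."""
    msg = json.loads(raw)
    if msg.get("jsonrpc") != "2.0":
        raise ValueError("not a JSON-RPC 2.0 message")
    if "error" in msg:
        err = msg["error"]
        raise RuntimeError(f"JSON-RPC error {err['code']}: {err['message']}")
    return msg["result"]

request = make_request("tools/list")
print(request)  # {"jsonrpc": "2.0", "id": 1, "method": "tools/list"}
print(parse_response('{"jsonrpc": "2.0", "id": 1, "result": {"tools": []}}'))  # {'tools': []}
```

Keeping transport separate from message handling is what lets the same messages travel over a local pipe or a network connection.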

The protocol defines three main types of capabilities that external systems can offer:

Core MCP Capabilities

Tools: Executable functions that perform specific actions, such as sending emails, querying databases, or calling APIs. Each tool is defined by a name, a description, and an input schema, written in JSON Schema, that specifies the parameters it accepts.

Resources: Data sources that can be read by the AI assistant, including files, database contents, API responses, or any other information repositories. Resources provide structured access to external data without requiring custom integration code.

Prompts: Reusable prompt templates with configurable parameters that help standardize common interaction patterns and ensure consistent behavior across different use cases.
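To make the three capability types concrete, the following Python sketch keeps one example of each in memory and answers the list, read, and get requests a server would receive for them. The method names (`tools/list`, `resources/list`, `resources/read`, `prompts/list`, `prompts/get`) and the result field names follow the MCP specification as we understand it. The order-lookup tool, the refund-policy resource and its URI, and the ticket-summary prompt are all hypothetical.

```python
from typing import Any

# Hypothetical capabilities, one of each type; names, URIs, and texts are invented.
TOOLS = [{
    "name": "query_orders",
    "description": "Look up orders for a customer",
    "inputSchema": {
        "type": "object",
        "properties": {"customer_id": {"type": "string"}},
        "required": ["customer_id"],
    },
}]

RESOURCES = {  # keyed by URI, which is how MCP identifies resources
    "file:///docs/refund-policy.md": {
        "name": "Refund policy",
        "mimeType": "text/markdown",
        "text": "Refunds are accepted within 30 days.",
    },
}

PROMPTS = {
    "summarize_ticket": {
        "description": "Summarize a support ticket",
        "arguments": [{"name": "ticket_text", "required": True}],
        "template": "Summarize this support ticket in two sentences:\n{ticket_text}",
    },
}

def handle(method: str, params: dict[str, Any]) -> dict[str, Any]:
    """Answer the list/read/get methods a server exposes for each capability type."""
    if method == "tools/list":
        return {"tools": TOOLS}
    if method == "resources/list":
        return {"resources": [{"uri": uri, "name": r["name"], "mimeType": r["mimeType"]}
                              for uri, r in RESOURCES.items()]}
    if method == "resources/read":
        r = RESOURCES[params["uri"]]
        return {"contents": [{"uri": params["uri"], "mimeType": r["mimeType"], "text": r["text"]}]}
    if method == "prompts/list":
        return {"prompts": [{"name": n, "description": p["description"], "arguments": p["arguments"]}
                            for n, p in PROMPTS.items()]}
    if method == "prompts/get":
        p = PROMPTS[params["name"]]
        # Fill the template's parameters to produce ready-to-use chat messages.
        text = p["template"].format(**params.get("arguments", {}))
        return {"messages": [{"role": "user", "content": {"type": "text", "text": text}}]}
    raise ValueError(f"unsupported method: {method}")
```

Note that the list methods return only descriptions; the actual data (a resource's text, a filled-in prompt) is fetched by a separate read or get request.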

How It Works in Practice

When an AI assistant connects to an external system through MCP, the two sides first perform a short initialization handshake to agree on a protocol version and the features each supports. The assistant then asks, in effect: "What can you do for me?" The system responds with a menu of available capabilities, each described in a way the AI can understand. A tool description typically includes:

Name and description: Metadata readable by both humans and models.

Input schema: A JSON Schema object detailing the expected arguments and their types.

Invocation: The tool is called by name, with arguments that must conform to its input schema.

Contextual information: Optional elements, such as hints about whether a tool only reads data or can modify it, that help the model understand when and why to use the tool.
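The discovery step can be sketched in Python with an in-process stand-in for the server. The client sends `initialize`, confirms with a `notifications/initialized` notification, and then calls `tools/list`; the server advertises the same `send_email` tool used in the example that follows. The server and client names and version strings are hypothetical, and `2025-03-26` is one published revision of the protocol rather than the only valid value.

```python
from typing import Any, Callable, Optional

# Tool definition the stand-in server advertises (mirrors the send_email example).
SEND_EMAIL_TOOL = {
    "name": "send_email",
    "description": "Send an email message",
    "inputSchema": {
        "type": "object",
        "properties": {
            "to": {"type": "string", "format": "email"},
            "subject": {"type": "string"},
            "body": {"type": "string"},
        },
        "required": ["to", "subject", "body"],
    },
}

def fake_server(msg: dict[str, Any]) -> Optional[dict[str, Any]]:
    """Answer one JSON-RPC message in-process; notifications (no id) get no reply."""
    if "id" not in msg:
        return None
    if msg["method"] == "initialize":
        result = {
            "protocolVersion": "2025-03-26",
            "capabilities": {"tools": {}},  # this server only offers tools
            "serverInfo": {"name": "example-email-server", "version": "0.1.0"},
        }
    elif msg["method"] == "tools/list":
        result = {"tools": [SEND_EMAIL_TOOL]}
    else:
        return {"jsonrpc": "2.0", "id": msg["id"],
                "error": {"code": -32601, "message": "Method not found"}}
    return {"jsonrpc": "2.0", "id": msg["id"], "result": result}

Send = Callable[[dict[str, Any]], Optional[dict[str, Any]]]

def discover_tools(send: Send) -> list[dict[str, Any]]:
    """Perform the handshake, then ask the server which tools it offers."""
    init = send({"jsonrpc": "2.0", "id": 1, "method": "initialize", "params": {
        "protocolVersion": "2025-03-26",
        "capabilities": {},
        "clientInfo": {"name": "example-client", "version": "0.1.0"},
    }})
    send({"jsonrpc": "2.0", "method": "notifications/initialized"})
    if "tools" not in init["result"]["capabilities"]:
        return []  # the server did not declare any tools
    listing = send({"jsonrpc": "2.0", "id": 2, "method": "tools/list"})
    return listing["result"]["tools"]

for tool in discover_tools(fake_server):
    print(tool["name"], "-", tool["description"])  # send_email - Send an email message
```

Checking the declared capabilities before listing avoids asking a server for features it never offered.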

For example, an email system might tell the AI:

```json
{
  "name": "send_email",
  "description": "Send an email message",
  "inputSchema": {
    "type": "object",
    "properties": {
      "to": {"type": "string", "format": "email"},
      "subject": {"type": "string"},
      "body": {"type": "string"}
    },
    "required": ["to", "subject", "body"]
  }
}
```

The AI assistant can then use this tool by supplying the three required arguments: the recipient's email address, the subject line, and the message body. The email system sends the message and reports back whether it succeeded.
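On the server side, the call arrives as a `tools/call` request naming the tool and its arguments. In the Python sketch below, a hypothetical `send_email` handler records messages in an in-memory outbox instead of contacting a real mail service. Following the MCP specification as we understand it, failures inside the tool are reported in the result with `isError` set to true, so the model can see what went wrong, while an unknown tool name is returned as a JSON-RPC error.

```python
from typing import Any

OUTBOX: list[dict[str, str]] = []  # stands in for a real mail service

def send_email(to: str, subject: str, body: str) -> str:
    """Hypothetical handler: records the message instead of sending it."""
    if "@" not in to:
        raise ValueError(f"invalid recipient: {to!r}")
    OUTBOX.append({"to": to, "subject": subject, "body": body})
    return f"Email sent to {to}"

HANDLERS = {"send_email": send_email}

def handle_tools_call(request: dict[str, Any]) -> dict[str, Any]:
    """Run the named tool and wrap its outcome in a tools/call response."""
    params = request["params"]
    handler = HANDLERS.get(params["name"])
    if handler is None:
        return {"jsonrpc": "2.0", "id": request["id"],
                "error": {"code": -32602, "message": f"Unknown tool: {params['name']}"}}
    try:
        text, is_error = handler(**params.get("arguments", {})), False
    except (TypeError, ValueError) as exc:
        # Missing/extra arguments (TypeError) or bad values (ValueError) become tool errors.
        text, is_error = str(exc), True
    return {"jsonrpc": "2.0", "id": request["id"],
            "result": {"content": [{"type": "text", "text": text}], "isError": is_error}}

reply = handle_tools_call({
    "jsonrpc": "2.0", "id": 3, "method": "tools/call",
    "params": {"name": "send_email", "arguments": {
        "to": "alice@example.com", "subject": "Hi", "body": "Meeting at 3pm."}},
})
print(reply["result"])
# {'content': [{'type': 'text', 'text': 'Email sent to alice@example.com'}], 'isError': False}
```

Returning tool failures as ordinary results, rather than protocol errors, gives the model a chance to correct its arguments and try again.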

This standardized approach means the AI doesn't need custom instructions for every different tool or system. Instead, it can understand any MCP-compatible capability by reading its structured description, making integrations more reliable and easier to maintain.
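Because every tool ships its own schema, a client can check a model's proposed arguments with one generic routine instead of per-tool code. The sketch below is a deliberately small, hypothetical validator covering only top-level `required`, `properties.*.type`, and `additionalProperties: false`; a production client would more likely pass the full schema to a complete JSON Schema validator such as the `jsonschema` package.

```python
from typing import Any

# JSON Schema primitive type names mapped to Python types (subset only).
_JSON_TYPES: dict[str, Any] = {
    "string": str, "number": (int, float), "integer": int,
    "boolean": bool, "array": list, "object": dict,
}

def check_arguments(schema: dict[str, Any], args: dict[str, Any]) -> list[str]:
    """Return human-readable problems with `args` under a tool's inputSchema.

    Only top-level `required`, `properties.*.type`, and `additionalProperties: false`
    are checked; keywords such as `format` or `enum` and nested schemas are ignored.
    """
    problems = [f"missing required field '{name}'"
                for name in schema.get("required", []) if name not in args]
    properties = schema.get("properties", {})
    for name, value in args.items():
        if name not in properties:
            if schema.get("additionalProperties") is False:
                problems.append(f"unexpected field '{name}'")
            continue
        expected = properties[name].get("type")
        # Union types (e.g. ["string", "null"]) are skipped in this sketch.
        py_type = _JSON_TYPES.get(expected) if isinstance(expected, str) else None
        # bool is a subclass of int in Python, so booleans are rejected for numeric types.
        is_bool_as_number = isinstance(value, bool) and expected in ("number", "integer")
        if py_type is not None and (not isinstance(value, py_type) or is_bool_as_number):
            problems.append(f"field '{name}' should be {expected}")
    return problems

send_email_schema = {
    "type": "object",
    "properties": {
        "to": {"type": "string", "format": "email"},
        "subject": {"type": "string"},
        "body": {"type": "string"},
    },
    "required": ["to", "subject", "body"],
}

print(check_arguments(send_email_schema, {"to": "bob@example.com", "subject": 42}))
# ["missing required field 'body'", "field 'subject' should be string"]
```

The same function works unchanged for any other tool, which is the practical payoff of describing capabilities with a shared schema language.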

MCP vs. RAG vs. AI Agents

MCP is often compared to Retrieval-Augmented Generation (RAG) and to AI agent architectures, but each has a distinct purpose:

- RAG focuses on enriching model output with external knowledge sources.

- MCP focuses on enabling models to take action using structured tools (and, through resources, to read external data in a standard way).

- AI agents are systems that often combine both techniques to reason and operate autonomously.

While RAG improves the quality of answers, MCP empowers action. And when used together, they create agents that are both informed and capable.
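The division of labor can be illustrated with a toy agent in Python: a retrieval step supplies knowledge (the RAG part), and a tool call performs an action (the MCP part). Everything here is hypothetical: word-overlap scoring stands in for embedding search, the reply is templated rather than generated by an LLM, and the `call_tool` parameter stands in for an MCP client issuing a `tools/call` request.

```python
import re
from typing import Any, Callable

# Hypothetical knowledge base used by the retrieval (RAG) step.
DOCS = [
    "Refunds are accepted within 30 days of purchase.",
    "Support hours are 9am to 5pm, Monday to Friday.",
]

ToolCaller = Callable[[str, dict[str, Any]], dict[str, Any]]

def _words(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(question: str) -> str:
    """RAG step: return the document sharing the most words with the question."""
    return max(DOCS, key=lambda doc: len(_words(doc) & _words(question)))

def run_agent(question: str, customer_email: str, call_tool: ToolCaller) -> dict[str, Any]:
    """Ground the reply in retrieved text (informed), then act via a tool (capable)."""
    context = retrieve(question)
    reply = f"Thanks for your question. {context}"  # an LLM would normally draft this
    return call_tool("send_email", {
        "to": customer_email, "subject": "Re: your question", "body": reply,
    })

def stub_call_tool(name: str, arguments: dict[str, Any]) -> dict[str, Any]:
    """Stand-in for an MCP client; echoes what a tools/call request would carry."""
    text = f"{name} -> {arguments['to']}: {arguments['body']}"
    return {"content": [{"type": "text", "text": text}], "isError": False}

result = run_agent("How many days do refunds take?", "carol@example.com", stub_call_tool)
print(result["content"][0]["text"])
# send_email -> carol@example.com: Thanks for your question. Refunds are accepted within 30 days of purchase.
```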

The Road Ahead

The adoption of MCP is still in its early days, but the momentum is real. As more platforms add support for MCP clients and servers, a new layer of AI infrastructure is emerging, one where models can plug into real-world systems with minimal friction.

Still, challenges remain. Governance, versioning, and discoverability of tools will be critical to MCP’s success. Community standards and tooling will need to evolve alongside the protocol.

But if the early signs are any indication, MCP could be the key to scaling LLM-based systems from isolated chatbots to collaborative agents embedded across enterprise workflows.

Final Thoughts

Model Context Protocol invites us to think of models not just as text generators, but as agents capable of structured interaction with the world around them.

For organizations building intelligent systems, MCP offers a path to cleaner architectures, safer execution, and more reliable outcomes. As the AI ecosystem matures, MCP may well become a key part of how we connect intelligence to impact.
